Why Azure Blob Storage?

Azure Blob Storage is Microsoft's offering for storing various types of data in the cloud. Using it from a Java application to persist generated artifacts such as files or movies is, in my opinion, a solution that is easy to implement. In this post I look at the practicalities of connecting a Java application to Azure Blob Storage.

Azure Blob Storage can serve these files or images directly to the browser from pretty much anywhere in the world. Naturally, there are client libraries for various languages and a REST API for writing to and reading from the storage.

Basics for connecting to Azure Blob Storage

Using Azure Storage requires a storage account. The account name together with an access key, which can be retrieved under Security + networking in the Azure portal, is enough to set up a connection to the storage.

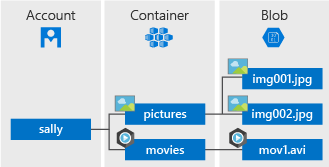

With the connection established, a container can be created in which the blobs are stored. Below, the object model of Blob Storage is shown. It gives an idea of how you can structure the stored blobs and shows the three resource types in Azure Blob Storage: the storage account, the container, and the blob.

Making it work with Java

For the Java part, the question is now: how do I interact with this beautiful service? A couple of dependencies are needed to set up the connection and do something with the response. This is perhaps not as straightforward as other blogs and manuals present it. These are the Maven dependencies I used in a project that uploads blobs to and reads blobs from the storage.

<properties>
    <stax2.version>4.2.1</stax2.version>
    <woodstox.version>6.2.4</woodstox.version>
</properties>

<dependencies>
    <dependency>
        <groupId>com.azure</groupId>
        <artifactId>azure-storage-blob</artifactId>
        <version>12.13.0</version>
    </dependency>
    <dependency>
        <groupId>com.azure</groupId>
        <artifactId>azure-storage-common</artifactId>
        <version>12.13.0</version>
    </dependency>
    <dependency>
        <groupId>com.azure</groupId>
        <artifactId>azure-core</artifactId>
        <version>1.20.0</version>
        <exclusions>
            <exclusion>
                <groupId>com.fasterxml.jackson.dataformat</groupId>
                <artifactId>jackson-dataformat-xml</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>com.fasterxml.woodstox</groupId>
        <artifactId>woodstox-core</artifactId>
        <version>${woodstox.version}</version>
    </dependency>
    <dependency>
        <groupId>org.codehaus.woodstox</groupId>
        <artifactId>stax2-api</artifactId>
        <version>${stax2.version}</version>
    </dependency>
</dependencies>

When working with an application server like WildFly, or with Spring, some dependencies are already provided because the server uses them itself. Often certain dependencies of your application overlap with these, and you tell the build tool that a dependency will be provided at runtime. The Java client for Azure Storage depends on jackson-dataformat-xml, and the web server used in the project already provided that library. The annoying thing was that the web server was missing the parser libraries that jackson-dataformat-xml needs to function correctly for Azure Storage. And yes, this caused a NoClassDefFoundError at runtime, which is a dreaded exception. A Maven solution for the dependency problem can look like this:

<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-dependency-plugin</artifactId>
    <configuration>
        <artifactItems>
            <artifactItem>
                <groupId>org.codehaus.woodstox</groupId>
                <artifactId>stax2-api</artifactId>
                <version>${stax2.version}</version>
                <type>jar</type>
                <overWrite>false</overWrite>
                <destFileName>stax2-api.jar</destFileName>
            </artifactItem>
            <artifactItem>
                <groupId>com.fasterxml.woodstox</groupId>
                <artifactId>woodstox-core</artifactId>
                <version>${woodstox.version}</version>
                <type>jar</type>
                <overWrite>false</overWrite>
                <destFileName>woodstox-core.jar</destFileName>
            </artifactItem>
        </artifactItems>
        <outputDirectory>${project.build.directory}/additional-jars</outputDirectory>
    </configuration>
</plugin>
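Whether the parser libraries are actually visible to the application can be checked at startup instead of waiting for the first upload to fail. The sketch below is one way to do that using only the JDK. The class ClasspathCheck and its method are hypothetical helpers, not part of any library, and the two class names are my assumption of representative classes from woodstox-core and stax2-api.

```java
import java.util.ArrayList;
import java.util.List;

/** Startup check (hypothetical helper): reports which required classes are not loadable. */
final class ClasspathCheck {

    // Assumed representative classes of the two parser libraries.
    static final List<String> XML_PARSER_CLASSES = List.of(
            "com.ctc.wstx.stax.WstxInputFactory",   // woodstox-core
            "org.codehaus.stax2.XMLInputFactory2"); // stax2-api

    private ClasspathCheck() {
    }

    /** Returns the names from classNames that cannot be loaded by this class's loader. */
    static List<String> missing(List<String> classNames) {
        List<String> notFound = new ArrayList<>();
        ClassLoader loader = ClasspathCheck.class.getClassLoader();
        for (String name : classNames) {
            try {
                // initialize=false: only test visibility, do not run static initializers
                Class.forName(name, false, loader);
            } catch (ClassNotFoundException | LinkageError e) {
                notFound.add(name);
            }
        }
        return notFound;
    }
}
```

Calling ClasspathCheck.missing(ClasspathCheck.XML_PARSER_CLASSES) during application startup and logging a non-empty result gives a readable message that names the missing library, rather than a NoClassDefFoundError deep inside the storage client.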

The two jars that end up in additional-jars are then copied to the web server's library directory before startup. With the dependencies present, we can move on to setting up a connection. To access a storage container using the Java client, a storage account and its access key are needed. With the storage account name a URL can be constructed from the template ENDPOINT_STRING_TEMPLATE = https://%s.blob.core.windows.net, which the client connects to. Using the client library, a BlobContainerClient can be created, which then lets you build a BlobClient that is used to upload data.

import com.azure.core.util.BinaryData;
import com.azure.storage.blob.BlobServiceClientBuilder;
import com.azure.storage.common.StorageSharedKeyCredential;

// Account name and access key are read from the environment
String storageAccount = System.getenv("BLOB_CONTAINER_SA");
String accountKey = System.getenv("BLOB_CONTAINER_KEY");
var storageSharedKeyCredential = new StorageSharedKeyCredential(storageAccount, accountKey);

var blobServiceClient = new BlobServiceClientBuilder()
        .credential(storageSharedKeyCredential)
        .endpoint(String.format(ENDPOINT_STRING_TEMPLATE, storageAccount))
        .buildClient();

// Create the container on first use
var blobContainerClient = blobServiceClient.getBlobContainerClient(containerName);
if (!blobContainerClient.exists()) {
    blobContainerClient = blobServiceClient.createBlobContainer(containerName);
}

// The "directory/" prefix is part of the blob name
var blobClient = blobContainerClient.getBlobClient("directory/" + file.getFilename());
blobClient.upload(BinaryData.fromString("interesting data"));

This example shows that a directory-like structure can be created in a container to categorize the objects. By default the container itself is flat, and the / in the blob name acts as a virtual directory separator.
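Since the directory is just a prefix of the blob name, it helps to build and take apart such names in one place. The following is a minimal sketch of that idea using plain string handling. BlobNames and its methods are hypothetical helpers introduced for illustration and are not part of the Azure SDK.

```java
/** Helpers for "/"-separated blob names (hypothetical, not part of the Azure SDK). */
final class BlobNames {

    private BlobNames() {
    }

    /** Joins a virtual directory and a file name into one blob name. */
    static String join(String directory, String fileName) {
        // Trim leading and trailing slashes so the result never contains "//"
        String dir = directory.replaceAll("^/+|/+$", "");
        return dir.isEmpty() ? fileName : dir + "/" + fileName;
    }

    /** Returns the virtual directory part of a blob name, or "" for a top-level blob. */
    static String directoryOf(String blobName) {
        int lastSlash = blobName.lastIndexOf('/');
        return lastSlash < 0 ? "" : blobName.substring(0, lastSlash);
    }
}
```

With this, the blob name from the upload example could be written as BlobNames.join("directory", file.getFilename()), and directoryOf can be used to group the names returned when listing a container.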

By default, the blobClient.upload method above won't overwrite an existing blob; a boolean can be passed as second argument to set this explicitly. In my project we uploaded multiple blobs and wanted to make sure no previous blobs remained in the container. For this, a delete method is available on the BlobClient, and with the following code one can delete all existing blobs in a container.

blobContainerClient.listBlobs().forEach(blobItem -> {
    var blobClient = blobContainerClient.getBlobClient(blobItem.getName());
    blobClient.delete();
});

How to test?

One of the nicest things I experienced when developing against the Blob Storage service is the presence of Azurite, an emulator that can be run locally or in Docker. Azurite emulates the behavior of the actual Blob service locally, so you can set up an integration test without depending on an actual Azure account. The fake account used by Azurite can be configured, and data can be uploaded to and retrieved from this service. The Docker configuration needed for Azurite can be added to docker-compose.yml like this:

azurite:
  image: mcr.microsoft.com/azure-storage/azurite
  hostname: azurite
  restart: always
  command: "azurite --loose --blobHost 0.0.0.0 --blobPort 10000"
  ports:
    - "10000:10000"

Docker environment configuration can be added to provide the values for the different host and credentials. These are the default development account credentials of Azurite.

AZURE_STORAGE_HOST: azurite
AZURE_STORAGE_PORT: 10000
BLOB_CONTAINER_SA: devstoreaccount1
BLOB_CONTAINER_KEY: Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==
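One detail to keep in mind is that Azurite expects the account name in the path of the URL (http://host:port/account) rather than in the host name, so the https://%s.blob.core.windows.net template does not apply to it. The sketch below shows one possible way to pick the endpoint based on the environment values above. BlobEndpoints and resolve are hypothetical names, and treating a missing host as "use the real service" is my assumption about how the switch could work.

```java
/** Builds Blob service endpoints (hypothetical helper, not part of the Azure SDK). */
final class BlobEndpoints {

    // Same template as the one used for the real service in this post.
    static final String AZURE_TEMPLATE = "https://%s.blob.core.windows.net";
    // Blob port that Azurite listens on in the docker-compose example.
    static final int DEFAULT_EMULATOR_PORT = 10000;

    private BlobEndpoints() {
    }

    /** Uses the emulator endpoint when a host is configured, the public one otherwise. */
    static String resolve(String account, String emulatorHost, Integer emulatorPort) {
        if (emulatorHost == null || emulatorHost.isBlank()) {
            return String.format(AZURE_TEMPLATE, account);
        }
        int port = emulatorPort != null ? emulatorPort : DEFAULT_EMULATOR_PORT;
        // Azurite puts the account name in the path instead of the host name
        return "http://" + emulatorHost + ":" + port + "/" + account;
    }
}
```

In the application, the host and port would come from System.getenv("AZURE_STORAGE_HOST") and System.getenv("AZURE_STORAGE_PORT"), and the resolved string would be passed to the endpoint method of the BlobServiceClientBuilder in place of the formatted template.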

So now you can test it before deploying to the outside world, which is great.

Conclusion

A storage service in the cloud is nothing new and there are numerous offerings, so I cannot tell you that Azure Storage is the right choice for you. On the other hand, I am convinced that integrating it with a Java application is not difficult, and that enough functionality and libraries are available to allow various solutions.

Extras

There is a GUI available to see what's stored in the Azure Storage containers, and it allows for manual adding and deleting. This is the Storage Explorer, which can be downloaded here: https://azure.microsoft.com/en-us/features/storage-explorer/

For more information about Azurite: https://docs.microsoft.com/en-us/azure/storage/common/storage-use-azurite?tabs=visual-studio

The Azure storage libraries for Java:

https://github.com/Azure/azure-sdk-for-java/tree/main/sdk/storage
